Results

The result of the tracking contains a nel::LandmarkData structure and a nel::EmotionResults vector.

- The nel::LandmarkData consists of the following members:
  - scale, the size of the face (a larger value means the user is closer to the camera)
  - roll, pitch, yaw, the three Euler angles of the face pose
  - translate, the position of the head center in the frame
  - the landmarks2d vector with either 0 or 49 points
  - the landmarks3d vector with either 0 or 49 points
  - the isGood boolean value, which indicates whether the tracking is deemed good enough

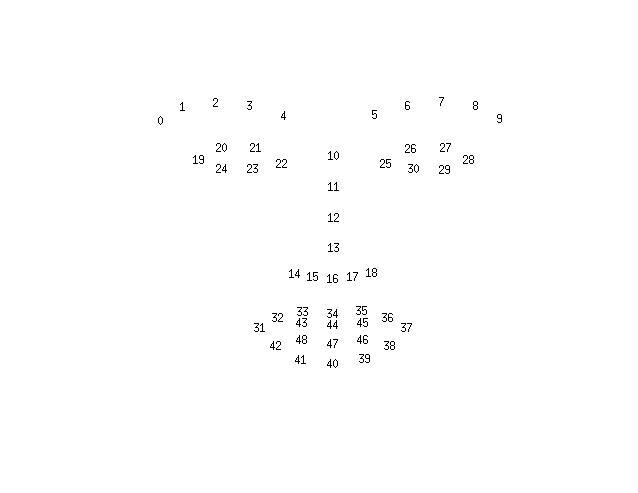

landmarks2d and landmarks3d contain 0 points if the tracker failed to find a face on the image, otherwise it always contain 49 points in the following structure:

landmarks3d contains the 3d coordinates of the frontal face in 3D space with 0 translation and 1 scale.
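The checks implied by the description above can be sketched as follows. This is a minimal illustration and not SDK code: `LandmarkDataSketch`, `Point2dSketch`, `Point3dSketch`, `FaceStatus` and `classify_landmarks` are hypothetical names, the structs mirror only the member names listed above, and their field types are assumptions. Consult the SDK headers for the actual definition of nel::LandmarkData.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins that mirror the member names described above.
// The field types are assumptions made only so the sketch compiles on its own.
struct Point2dSketch { float x; float y; };
struct Point3dSketch { float x; float y; float z; };

struct LandmarkDataSketch {
    float scale = 0.0f;                      // larger means closer to the camera
    float roll = 0.0f, pitch = 0.0f, yaw = 0.0f;
    Point2dSketch translate{0.0f, 0.0f};     // head center in the frame
    std::vector<Point2dSketch> landmarks2d;  // 0 or 49 points
    std::vector<Point3dSketch> landmarks3d;  // 0 or 49 points
    bool isGood = false;
};

constexpr std::size_t kLandmarkCount = 49;

enum class FaceStatus { NoFace, LowQuality, Good };

// Classifies one tracking result using only the rules stated in the text:
// empty landmark vectors mean no face was found, and isGood tells whether
// the tracking is deemed good enough.
inline FaceStatus classify_landmarks(const LandmarkDataSketch& data) {
    if (data.landmarks2d.empty() || data.landmarks3d.empty()) {
        return FaceStatus::NoFace;
    }
    // Per the description, a found face always comes with 49 points.
    assert(data.landmarks2d.size() == kLandmarkCount);
    assert(data.landmarks3d.size() == kLandmarkCount);
    return data.isGood ? FaceStatus::Good : FaceStatus::LowQuality;
}
```

Checking for empty landmark vectors before reading isGood keeps the "no face" case separate from the "face found but tracking quality is low" case, which callers often want to handle differently.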

- The nel::EmotionResults contains multiple nel::EmotionData elements with the following members:
  - probability, the probability of the emotion
  - isActive, whether the probability is higher than an internal threshold
  - isDetectionSuccessful, whether the tracking quality was good enough to reliably detect this emotion

  The order of the nel::EmotionData elements is the same as the order of the emotions in nel::Tracker::get_emotion_IDs() and in nel::Tracker::get_emotion_names().
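Because the emotion results and the emotion names share the same order, they can be paired by index. The sketch below illustrates this under stated assumptions: `EmotionDataSketch` and `active_emotions` are hypothetical names, the struct mirrors only the member names listed above with assumed types, and `names` stands for whatever nel::Tracker::get_emotion_names() returns, assumed here to be convertible to a vector of strings.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in mirroring the nel::EmotionData member names.
// The field types are assumptions.
struct EmotionDataSketch {
    float probability = 0.0f;
    bool isActive = false;
    bool isDetectionSuccessful = false;
};

// Returns the names of the emotions that are both reliably detected and active.
// results[i] is assumed to describe the emotion called names[i].
inline std::vector<std::string> active_emotions(
    const std::vector<EmotionDataSketch>& results,
    const std::vector<std::string>& names) {
    assert(results.size() == names.size());
    std::vector<std::string> active;
    for (std::size_t i = 0; i < results.size(); ++i) {
        // Skip emotions whose detection was not reliable for this frame.
        if (results[i].isDetectionSuccessful && results[i].isActive) {
            active.push_back(names[i]);
        }
    }
    return active;
}
```

Testing isDetectionSuccessful before isActive is a design choice in this sketch: it avoids reporting an emotion as active when the tracking quality was too low to trust the probability.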

Interpretation of the classifier output

The probability output of the Realeyes classifier (from the nel::EmotionData structure) has the following properties:

- It is a continuous value in the [0, 1] range.
- It changes depending on the type and number of facial features activated.
- It typically indicates facial activity in the regions of the face that correspond to a given facial expression.
- Strong facial wrinkles or shadows can amplify the classifier's sensitivity to the corresponding facial regions.
- It is purposefully sensitive, as the classifier is trained to capture slight expressions.
- It should not be interpreted as the intensity of a given facial expression.
- It is not possible to prescribe which facial features correspond to which output levels, due to the nature of the ML models used.

We recommend the following interpretation of the probability output:

- values close to 0: no or very little activity on the face with respect to a given facial expression
- values between 0 and the binary threshold: some facial activity was perceived, though in the view of the classifier it does not amount to a basic facial expression
- values just below the binary threshold: high facial activity was perceived, which under some circumstances may be interpreted as a true basic facial expression and under others not (e.g. watching ads vs. playing games)
- values above the binary threshold: high facial activity was perceived, which in the view of the classifier amounts to a basic facial expression
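The four bands above can be expressed as a small helper. This is only a sketch: `ActivityBand` and `interpret_probability` are hypothetical names, and all three tuning values are assumptions. The text describes the binary threshold as internal and does not quantify "close to 0" or "just below", so `binaryThreshold`, `nearZero` and `margin` must be chosen by the caller for their own use case.

```cpp
#include <cassert>

enum class ActivityBand {
    NearZero,            // no or very little activity
    BelowThreshold,      // some activity, not a basic facial expression
    JustBelowThreshold,  // high activity, interpretation depends on context
    AboveThreshold       // high activity, a basic facial expression
};

// Maps a probability to one of the four recommended bands.
// binaryThreshold, nearZero and margin are illustrative parameters, not SDK
// values. When the bands overlap, the band nearer the threshold wins.
inline ActivityBand interpret_probability(double probability,
                                          double binaryThreshold,
                                          double nearZero = 0.05,
                                          double margin = 0.05) {
    assert(probability >= 0.0 && probability <= 1.0);
    assert(nearZero >= 0.0 && margin >= 0.0);
    if (probability > binaryThreshold) {
        return ActivityBand::AboveThreshold;
    }
    if (probability >= binaryThreshold - margin) {
        return ActivityBand::JustBelowThreshold;
    }
    if (probability <= nearZero) {
        return ActivityBand::NearZero;
    }
    return ActivityBand::BelowThreshold;
}
```

The result is a coarse label rather than an intensity score, in line with the guidance that the probability should not be read as the intensity of an expression.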